Nice to meet you. I’m a researcher, software developer, photographer, and musician living in Darmstadt, Germany.

I’m deputy head of the competence center for Spatial Information Management at Fraunhofer IGD and a lecturer at the Technical University of Darmstadt. I have a PhD in Computer Science. My research interests include the processing of big geospatial data, workflow management, distributed systems, and cloud computing.

In my spare time, I’m a passionate musician and photographer. I also work on several open-source projects and contribute to popular frameworks and libraries. Feel free to contact me if you want to know more.

Notoriously curious me exploring the world.

I was given the opportunity to present my paper about capability-based scheduling in the Cloud at the DATA 2020 conference. I talked about Steep’s software architecture and its scheduling algorithm.

A question I was once asked (“Is Cloud Computing ethically justifiable?”) led me to create this presentation about Smart Cities that use Cloud Computing to make the urban environment more livable.

A tutorial I gave at the Web3D 2014 conference in Vancouver, BC, Canada. I presented different tools to prepare and visualize big 3D city models on the Web. The slides start with a motivating example, but most of the talk was live coding.

In this presentation, which I gave at the Utility and Cloud Computing Conference (UCC 2014), I talked about a novel user interface based on Domain-Specific Languages (DSLs) that allow domain experts to harness the capabilities of Cloud Computing.

These are the things I do.

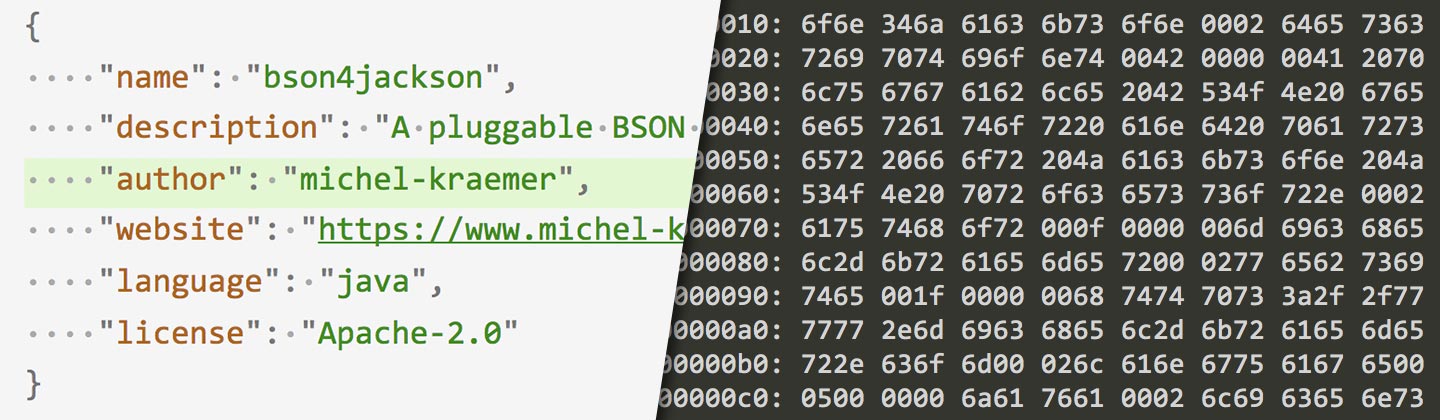

This library adds BSON support to the Jackson JSON processor. BSON is binary encoded JSON. Read my tutorial for more information.

This plugin adds a Download task to Gradle that displays progress information just like Gradle does when it retrieves an artifact from a repository.

citeproc-java is a Citation Style Language (CSL) processor for Java. It interprets CSL styles and generates citations and bibliographies, for example, from BibTeX files.

Steep is a Scientific Workflow Management System I’m working on at Fraunhofer IGD. It was a core part of my PhD thesis. In 2020, we decided to release it under an open-source license.

I’m the official maintainer of the Vert.x website. Vert.x is a toolkit for building reactive applications on the Java Virtual Machine.

GeoRocket is a high-performance data store for geospatial files. It’s a project I work on with my team at Fraunhofer IGD.

Spamihilator is a free anti-spam filter that sits between your email client and the Internet and examines every incoming message, filtering out useless spam (junk) mail.

Besides the open-source projects I actively maintain, I also contribute to various other repositories. Visit my profile on GitHub if you want to know more. Please also consider sponsoring my work.

In my other life, I’m a passionate photographer.

I love travelling, hiking, and exploring. My constant curiosity has led me to some incredible places on Earth, mostly in Europe and in the colder, northern regions of the planet. I mostly do landscape photography, but I often visit beautiful cities as well.

My bucket list is long (maybe too long), and I try to roam the world as much as I can. Staying outdoors in nature and going on photography adventures has become a part of me that I cannot imagine losing.

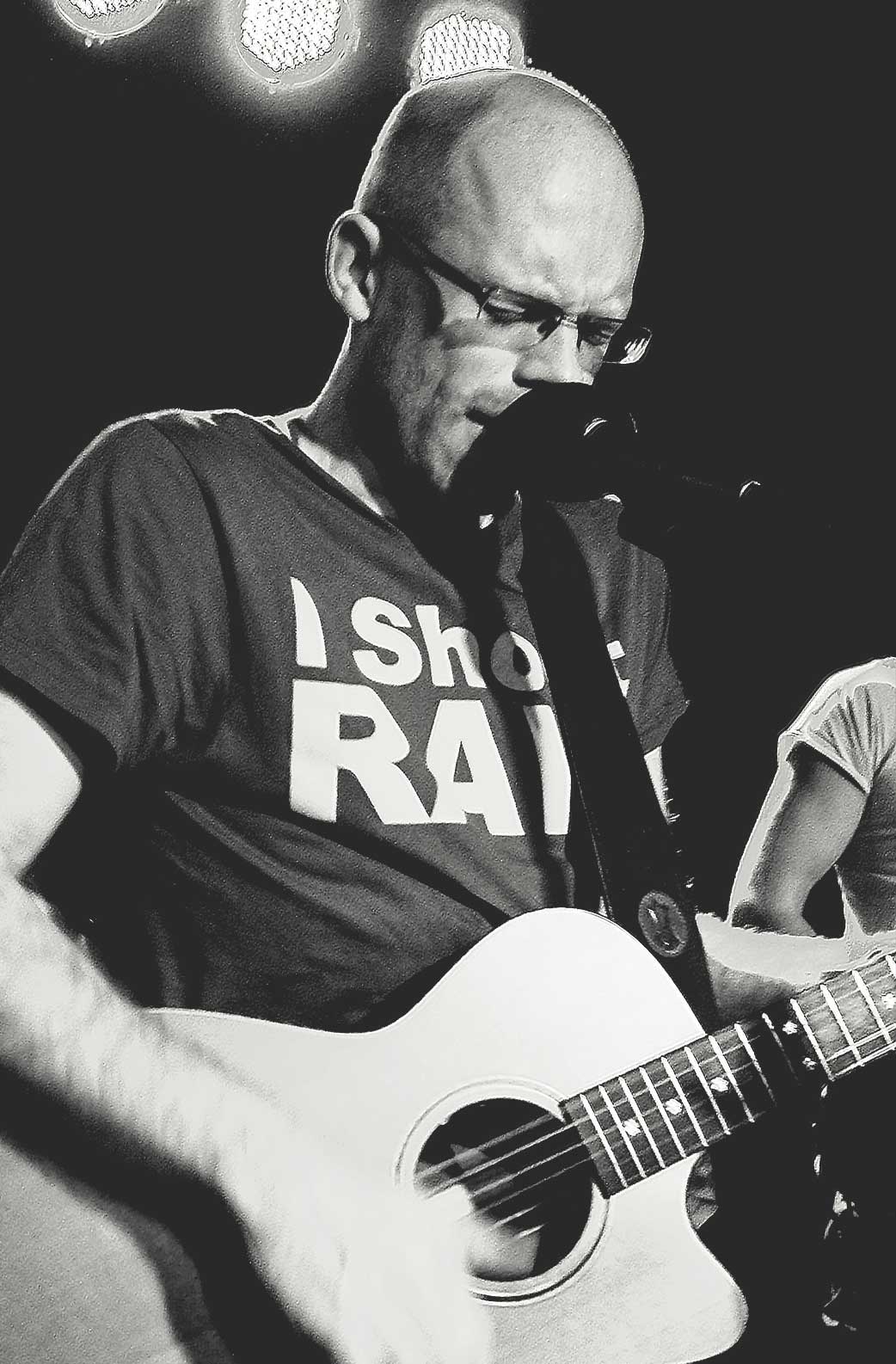

I love singing and playing acoustic guitar.

I spend my spare time with the rock band Rejected Papers. We do covers of various artists and occasionally play a gig in our local area. If you want to see us or book us for your event, feel free to contact me.

In addition, I have a YouTube channel where I upload acoustic folk songs and singer-songwriter covers from time to time. Feel free to subscribe if you like my videos. Thanks!